The internet has a ton of valuable data, but it’s not always easy to grab in a usable format. We can use web scraping to pull data from websites that don’t make it easy to download. This gives us a way to analyze our competitors, monitor prices, track trends, and more.

In the previous article, we learned how to do web scraping using the Cheerio library. That approach has limitations because it relies on the entire HTML content being available when we fetch the page. Many applications are interactive and depend on JavaScript to display the data on the screen, so to scrape them we need a different approach.

Playwright is a popular tool commonly used for end-to-end tests. However, its use is not restricted to testing.

If you want to know more about testing with Playwright, check out JavaScript testing #17. Introduction to End-to-End testing with Playwright

Playwright allows us to scrape the data from websites by opening them in an actual web browser. Thanks to this, we are not limited to scraping static content.

Running Playwright for Web Scraping

First, let’s install and set up Playwright.

```bash
npm init playwright@latest
```

Let’s use Playwright to scrape a list of videos on YouTube for a given search query. The first step is to launch a browser and create a new, blank page.

scrape.ts

```typescript
import { chromium } from '@playwright/test';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();
}
```

Above, we disable headless mode while developing the scraper. This way, we can see what the browser is doing.
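One way to switch between a visible and a headless browser without editing the source is to derive the launch options from an environment variable. The sketch below assumes a hypothetical `HEADLESS` variable and a hypothetical `resolveLaunchOptions` helper; neither is part of Playwright, while `headless` and `slowMo` are regular launch options.

```typescript
// The subset of Playwright launch options used in this sketch.
interface LaunchFlags {
  headless: boolean;
  slowMo?: number;
}

// HEADLESS is a hypothetical variable name chosen for this example.
function resolveLaunchOptions(
  env: Record<string, string | undefined>,
): LaunchFlags {
  // Default to headless; show the browser only when explicitly asked to.
  const headless = env.HEADLESS !== 'false';

  // When watching the browser, slow each action down so the steps are visible.
  return headless ? { headless } : { headless, slowMo: 250 };
}

console.log(resolveLaunchOptions({ HEADLESS: 'false' }));
```

With this in place, the launch call could become `chromium.launch(resolveLaunchOptions(process.env))`, and running the script with `HEADLESS=false` would open a visible window.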

Interacting with the page

Now, we can interact with the page and navigate to YouTube.

openYoutube.ts

```typescript
import { Page } from '@playwright/test';

export async function openYoutube(page: Page) {
  await page.goto('https://www.youtube.com/');
}
```

scrape.ts

```typescript
import { chromium } from '@playwright/test';
import { openYoutube } from './openYoutube';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();

  await openYoutube(page);
}
```

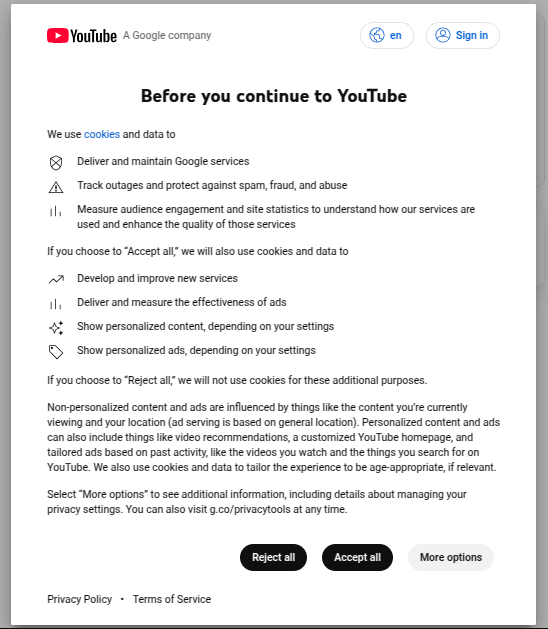

The first thing we notice is that YouTube opens a popup asking us about the cookies.

To close it, we can use the Locators API built into Playwright, which allows us to find elements on the page. To understand what element we’re looking for, we can navigate to the Developer Tools in the browser that Playwright opens. When we do that, we notice that the reject button looks like this:

```html
<button>
  <div>
    <span>Reject all</span>
  </div>
</button>
```

The above is a simplified representation of the button without the included attributes.

We can use this knowledge to find the button and click it to close the popup.

openYoutube.ts

```typescript
import { Page } from '@playwright/test';

export async function openYoutube(page: Page) {
  await page.goto('https://www.youtube.com/');

  // Reject cookies
  await page.locator('button:has-text("Reject all")').first().click();
}
```
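The cookie popup may not appear for every visitor, and a plain `click()` eventually fails if the button never shows up. A minimal sketch of a more forgiving click is shown here, assuming a hypothetical `clickIfPresent` helper. It uses a small structural type instead of importing Playwright's `Locator`, relying only on the `timeout` option that `click()` accepts.

```typescript
// Structural type covering the single Locator method this sketch needs.
interface ClickTarget {
  click(options?: { timeout?: number }): Promise<void>;
}

// Hypothetical helper: try to click, but give up quietly if the element never shows up.
async function clickIfPresent(
  target: ClickTarget,
  timeoutMs = 3000,
): Promise<boolean> {
  try {
    await target.click({ timeout: timeoutMs });
    return true;
  } catch {
    // Playwright rejects when the element does not become actionable in time.
    // Treating every failure as "not present" is a deliberate simplification.
    return false;
  }
}
```

Inside `openYoutube`, this could be called as `await clickIfPresent(page.locator('button:has-text("Reject all")').first())`. The trade-off is that real errors are swallowed too, so a stricter version would inspect the error before ignoring it.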

Similarly, we can use the Locators API to find videos using a given query.

searchForVideos.ts

```typescript
import { Page } from '@playwright/test';

export async function searchForVideos(page: Page, searchQuery: string) {
  await page.locator('input[name="search_query"]').fill(searchQuery);

  await page.getByRole('button', { name: 'Search', exact: true }).click();
}
```
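As an alternative to typing into the search box, we could navigate straight to the results page. The sketch below assumes that YouTube keeps serving search results under `/results` with the same `search_query` parameter name as the input above; `buildSearchUrl` is a hypothetical helper, not part of any library.

```typescript
// Hypothetical helper. The /results path and the search_query parameter
// are assumptions about YouTube's URL scheme and may change.
function buildSearchUrl(searchQuery: string): string {
  const searchUrl = new URL('/results', 'https://www.youtube.com');

  // URLSearchParams takes care of encoding spaces and special characters.
  searchUrl.searchParams.set('search_query', searchQuery);

  return searchUrl.toString();
}

console.log(buildSearchUrl('Javascript tutorial'));
// https://www.youtube.com/results?search_query=Javascript+tutorial
```

Driving the form mimics a real user more closely, while the direct URL skips one interaction that can break when the page layout changes.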

scrape.ts

```typescript
import { chromium } from '@playwright/test';
import { openYoutube } from './openYoutube';
import { searchForVideos } from './searchForVideos';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();

  await openYoutube(page);

  await searchForVideos(page, 'Javascript');
}
```

Scraping the data

To get the data for each video, note that each search result's title is rendered as an anchor inside a heading.

```html
<h3 class="title-and-badge">
  <a
    href="/watch?v=EerdGm-ehJQ&pp=ygUKSmF2YXNjcmlwdA%3D%3D"
    title="JavaScript Tutorial Full Course - Beginner to Pro"
  >
    <!-- ... -->
  </a>
</h3>
```

When we perform an action on a single element, like clicking it, Playwright automatically waits for that element to appear and become actionable. However, when we want to work on multiple elements, Playwright doesn’t automatically wait for any of them to appear. Because of that, we should explicitly wait for the elements to appear.

getVideosData.ts

```typescript
import { Page } from '@playwright/test';

export async function getVideosData(page: Page) {
  // Creating a locator is synchronous, so no await is needed here
  const allVideoAnchors = page.locator('.title-and-badge a');

  // Wait for the videos to appear
  await allVideoAnchors.first().waitFor();

  // ...
}
```

Now, to get the data about each video, we need to use the evaluateAll method. It allows us to run a piece of code in the browser instead of Node.js to access the attributes of each element.

getVideosData.ts

```typescript
import { Page } from '@playwright/test';

export async function getVideosData(page: Page) {
  const allVideoAnchors = page.locator('.title-and-badge a');

  // Wait for the videos to appear
  await allVideoAnchors.first().waitFor();

  return allVideoAnchors.evaluateAll((anchors) => {
    // ...
  });
}
```

The last step is to create an array that contains the title and URL of each video.

getVideosData.ts

```typescript
import { Page } from '@playwright/test';

export async function getVideosData(page: Page) {
  const allVideoAnchors = page.locator('.title-and-badge a');

  // Wait for the videos to appear
  await allVideoAnchors.first().waitFor();

  return allVideoAnchors.evaluateAll((anchors) => {
    return anchors.map((anchor) => {
      // We assume that every anchor should have the href and the title
      return {
        href: anchor.getAttribute('href') ?? '',
        title: anchor.getAttribute('title') ?? '',
      };
    });
  });
}
```

scrape.ts

```typescript
import { chromium } from '@playwright/test';
import { openYoutube } from './openYoutube';
import { searchForVideos } from './searchForVideos';
import { getVideosData } from './getVideosData';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();

  await openYoutube(page);

  await searchForVideos(page, 'Javascript');

  const videosData = await getVideosData(page);

  console.log(videosData);
}
```

```
[
  {
    href: '/watch?v=EerdGm-ehJQ&pp=ygUKSmF2YXNjcmlwdA%3D%3D',
    title: 'JavaScript Tutorial Full Course - Beginner to Pro'
  },
  {
    href: '/watch?v=lkIFF4maKMU&pp=ygUKSmF2YXNjcmlwdA%3D%3D',
    title: '100+ JavaScript Concepts you Need to Know'
  },
  ...
]
```
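The scraped `href` values are relative, and any anchor missing an attribute ends up as an empty string. If the results are meant to be stored or shared, a small post-processing step might help. The sketch below assumes a hypothetical `toAbsoluteVideos` helper that drops incomplete entries and resolves each `href` against the YouTube origin.

```typescript
interface VideoData {
  href: string;
  title: string;
}

// Hypothetical helper: drop incomplete entries and resolve relative hrefs.
function toAbsoluteVideos(
  videos: VideoData[],
  baseUrl = 'https://www.youtube.com',
): VideoData[] {
  return videos
    .filter((video) => video.href !== '' && video.title !== '')
    .map((video) => ({
      title: video.title,
      // The URL constructor resolves a relative path against the base
      href: new URL(video.href, baseUrl).toString(),
    }));
}

const sampleVideos: VideoData[] = [
  {
    href: '/watch?v=EerdGm-ehJQ&pp=ygUKSmF2YXNjcmlwdA%3D%3D',
    title: 'JavaScript Tutorial Full Course - Beginner to Pro',
  },
  // An entry where both attributes were missing on the page
  { href: '', title: '' },
];

console.log(toAbsoluteVideos(sampleVideos));
```

Only the first sample entry survives, with its `href` turned into a full `https://www.youtube.com/watch?...` address.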

Navigating to multiple pages

We can use Playwright to open multiple different pages concurrently. Let’s use that to get the details of each video. First, let’s modify our openYoutube function to handle various pages on YouTube.

openYoutube.ts

```typescript
import { Page } from '@playwright/test';

export async function openYoutube(page: Page, subPath = '') {
  // Resolve against the origin so a leading slash in subPath doesn't produce "//"
  await page.goto(new URL(subPath, 'https://www.youtube.com/').toString());

  // Reject cookies
  await page.locator('button:has-text("Reject all")').first().click();
}
```

So far, we have the information about each video in an array. It contains the title and URL of each video.

scrape.ts

```typescript
import { chromium } from '@playwright/test';
import { openYoutube } from './openYoutube';
import { searchForVideos } from './searchForVideos';
import { getVideosData } from './getVideosData';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();

  await openYoutube(page);

  await searchForVideos(page, 'Javascript');

  const videosData = await getVideosData(page);

  // ...
}
```

Let’s create a function that navigates to each video to get more detailed information. What’s crucial is that we can do that in parallel by combining Promise.all and the map function.

getDetailedVideosData.ts

```typescript
import { Browser } from '@playwright/test';
import { openYoutube } from './openYoutube';

interface VideoData {
  href: string;
  title: string;
}

async function getSingleVideoData(browser: Browser, data: VideoData) {
  // ...
}

export async function getDetailedVideosData(
  browser: Browser,
  videosData: VideoData[],
) {
  return Promise.all(
    videosData.map((data) => {
      return getSingleVideoData(browser, data);
    }),
  );
}
```
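Combining `Promise.all` with `map` starts every task at once, which means one browser page per video. For long result lists, it may be worth capping how many run simultaneously. The sketch below is one possible approach, assuming a hypothetical `mapWithConcurrency` helper that is not part of Playwright.

```typescript
// Hypothetical helper: like Promise.all + map, but with at most `limit` tasks at once.
async function mapWithConcurrency<T, R>(
  items: T[],
  limit: number,
  task: (item: T) => Promise<R>,
): Promise<R[]> {
  const results: R[] = new Array(items.length);
  let nextIndex = 0;

  // Each worker keeps claiming the next unprocessed index until none are left.
  async function worker(): Promise<void> {
    while (nextIndex < items.length) {
      const index = nextIndex++;
      results[index] = await task(items[index]);
    }
  }

  // Never start more workers than there are items, and always start at least one.
  const workerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));

  // Results keep the order of the input, regardless of which task finished first.
  return results;
}
```

In `getDetailedVideosData`, the `Promise.all` call could then be swapped for something like `mapWithConcurrency(videosData, 3, (data) => getSingleVideoData(browser, data))`, where the limit of 3 is an arbitrary example value.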

We open a new page for each video and use the Locators API to gather additional data.

getDetailedVideosData.ts

```typescript
import { Browser } from '@playwright/test';
import { openYoutube } from './openYoutube';

interface VideoData {
  href: string;
  title: string;
}

async function getSingleVideoData(browser: Browser, data: VideoData) {
  const page = await browser.newPage();

  await openYoutube(page, data.href);

  const numberOfLikes = await page
    .locator('button[title="I like this"]')
    .first()
    .textContent();

  return {
    title: data.title,
    href: data.href,
    numberOfLikes,
  };
}

// ...
```
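Since `textContent()` returns `string | null`, the value of `numberOfLikes` is raw text rather than a number. If the button shows an abbreviated count, it could be converted as sketched below. This assumes English-style suffixes such as `12K` or `1.2M`, which depends on the locale the page is rendered in; `parseAbbreviatedCount` is a hypothetical helper.

```typescript
// Hypothetical helper: turn text such as "12K" or "1.2M" into a number.
// Assumes English-style suffixes; returns null when the text does not match.
function parseAbbreviatedCount(text: string | null): number | null {
  if (text === null) {
    return null;
  }

  // A plain or decimal number, optionally followed by K, M, or B
  const match = text.trim().match(/^(\d+(?:\.\d+)?)\s*([KMB])?$/i);
  if (!match) {
    return null;
  }

  const multipliers: Record<string, number> = { K: 1e3, M: 1e6, B: 1e9 };
  const suffix = match[2]?.toUpperCase();
  const multiplier = suffix ? multipliers[suffix] : 1;

  // Round to avoid floating-point leftovers from the multiplication
  return Math.round(Number(match[1]) * multiplier);
}

console.log(parseAbbreviatedCount('12K')); // 12000
console.log(parseAbbreviatedCount('Like')); // null
```

Values with thousands separators or other locales' suffixes fall through to `null`, so the caller can decide whether to keep the raw text instead.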

We must also remember to close the browser when it is not needed anymore.

scrape.ts

```typescript
import { chromium } from '@playwright/test';
import { openYoutube } from './openYoutube';
import { searchForVideos } from './searchForVideos';
import { getVideosData } from './getVideosData';
import { getDetailedVideosData } from './getDetailedVideosData';

async function scrape() {
  const browser = await chromium.launch({ headless: false });

  const page = await browser.newPage();

  await openYoutube(page);

  await searchForVideos(page, 'Javascript');

  const videosData = await getVideosData(page);

  const result = await getDetailedVideosData(browser, videosData);

  await browser.close();

  return result;
}
```
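If any step throws before `browser.close()` is reached, the browser process keeps running. One way to guard against that is a `try`/`finally` wrapper. The sketch below assumes a hypothetical `withClosable` helper and a structural `Closable` type, so that it works with anything exposing an async `close()`, including Playwright's `Browser`.

```typescript
// Structural type: anything with an async close(), such as Playwright's Browser.
interface Closable {
  close(): Promise<void>;
}

// Hypothetical helper: run `use` and always close the resource afterwards,
// whether `use` resolves or throws.
async function withClosable<C extends Closable, R>(
  open: () => Promise<C>,
  use: (resource: C) => Promise<R>,
): Promise<R> {
  const resource = await open();

  try {
    return await use(resource);
  } finally {
    await resource.close();
  }
}
```

The body of `scrape` could then be wrapped as `withClosable(() => chromium.launch(), async (browser) => { /* scraping steps */ })`, with the explicit `browser.close()` call removed.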

Summary

In this article, we used the Playwright tool to scrape the data from YouTube. While this might be more resource-heavy than using libraries such as Cheerio, it has a lot of benefits. By operating within a real browser environment, we’re not limited to scraping static websites and can handle dynamic, JavaScript-driven content. Overall, using Playwright is a solid approach for scraping both static and dynamic websites, allowing us to analyze the content of various applications.
