The internet is filled with vast amounts of valuable data. However, much of the information isn’t available in a structured format we can download and use. To handle that, we can use web scraping. Web scraping is a process of extracting data from websites that don’t provide it in a structured format out of the box, for example, through a REST API. Typically, it involves fetching a web page and analyzing its content programmatically.

Such data can be used for monitoring prices, analyzing competitors, tracking trends, and more. While this information is often publicly available on the internet, scraping it may still violate the Terms of Service of some websites. Therefore, you should proceed with caution.

Scraping using Cheerio

One approach to scraping we can use is to simply fetch the raw HTML content of a website and parse it using the Cheerio library. The Cheerio library has an intuitive set of functions that mimic jQuery.

Fetching and parsing the page

First, we need to fetch the contents of a web page and parse it with Cheerio. To test it, let’s use the JavaScript page on Wikipedia.

fetchWebsiteContent.ts

|

1 2 3 4 5 6 7 8 |

import { load } from 'cheerio'; export async function fetchWebsiteContent(url: string) { const response = await fetch(url); const content = await response.text(); return load(content); } |

To use the above function, we must provide the URL of the page we want to fetch.

index.ts

|

1 |

fetchWebsiteContent('https://en.wikipedia.org/wiki/JavaScript'); |

Reading the contents of the page

When we look at the JavaScript page on Wikipedia, we can see that it lists various information about JavaScript.

To scrape it, we must look closer at the website’s HTML. First, let’s focus on the name of the language.

|

1 2 3 |

<caption class="infobox-title summary"> JavaScript </caption> |

Looks like we need to find an element with the infobox-title.

scrapeProgrammingLanguageInformation.ts

|

1 2 3 4 5 6 7 8 9 10 11 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; async function scrapeProgrammingLanguageInformation(url: string) { const $ = await fetchWebsiteContent(url); const title = $('.infobox-title').text(); return { title, }; } |

When using Cheerio, we typically use the $ variable name to mimic the jQuery API.

Above, we look through the page and find the element with the infobox-title class. Then, we retrieve its text content.

More advanced queries

Now, let’s find the designer of the JavaScript language. When we take a look at the HTML from Wikipedia, we can see that it is stored in a table row.

|

1 2 3 4 5 6 7 8 9 10 11 |

<tr> <th scope="row" class="infobox-label"> <a href="/wiki/Software_design" title="Software design">Designed by</a> </th> <td class="infobox-data"> <a href="/wiki/Brendan_Eich" title="Brendan Eich">Brendan Eich</a> of <a href="/wiki/Netscape" title="Netscape">Netscape</a> initially; others have also contributed to the <a href="/wiki/ECMAScript" title="ECMAScript">ECMAScript</a> standard </td> </tr> |

To find out who designed the language, we can:

- Find the anchor element looking for the

Designed by content.

1<a href="/wiki/Software_design" title="Software design">Designed by</a> - Look for its closest

<th> ancestor.

123<th scope="row" class="infobox-label"><!-- ... --></th> - Finds it’s closest

<td> sibling.

123<td class="infobox-data"><!-- ... --></td> - Extract the content from <td>.

When doing that, we need to watch out for the hard space – – included in the title.

scrapeProgrammingLanguageInformation.ts

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; async function scrapeProgrammingLanguageInformation(url: string) { const $ = await fetchWebsiteContent(url); const title = $('.infobox-title').text(); const designedBy = $('a:contains("Designed\u00A0by")') .closest('th') .next('td') .text(); return { title, designedBy, }; } |

We need to do similar operations to extract the date of the first appearance and the current stable release.

scrapeProgrammingLanguageInformation.ts

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; async function scrapeProgrammingLanguageInformation(url: string) { const $ = await fetchWebsiteContent(url); const title = $('.infobox-title').text(); const designedBy = $('a:contains("Designed\u00A0by")') .closest('th') .next('td') .text(); const firstAppearance = $('th:contains("First\u00A0appeared")') .closest('th') .next('td') .text(); const stableRelease = $('a:contains("Stable release")') .closest('th') .next('td') .text(); return { title, designedBy, firstAppearance, stableRelease, }; } |

Now, we can use our function to fetch the data about a particular programming language.

|

1 2 3 4 5 |

const data = await scrapeProgrammingLanguageInformation( 'https://en.wikipedia.org/wiki/JavaScript', ) console.log(data); |

|

1 2 3 4 5 6 |

{ title: 'JavaScript', designedBy: 'Brendan Eich of Netscape initially; others have also contributed to the ECMAScript standard', firstAppearance: '4 December 1995; 29 years ago (1995-12-04)[1]', stableRelease: 'ECMAScript 2024[2] \n / June 2024; 8 months ago (June 2024)' } |

This can work for other programming languages on Wikipedia.

|

1 2 3 4 5 |

const data = await scrapeProgrammingLanguageInformation( 'https://en.wikipedia.org/wiki/TypeScript', ) console.log(data); |

However, the crucial thing is that our code needs to be tailored to handle the specific HTML. For example, if Wikipedia changes the infobox-title class name to something else, our code won’t work anymore.

Unfortunately, Wikipedia is not necessarily consistent. For example, the page for Bash does not have the “Designed by” section. Instead, it has the “Original author” and “Developer” sections.

Fetching multiple pages

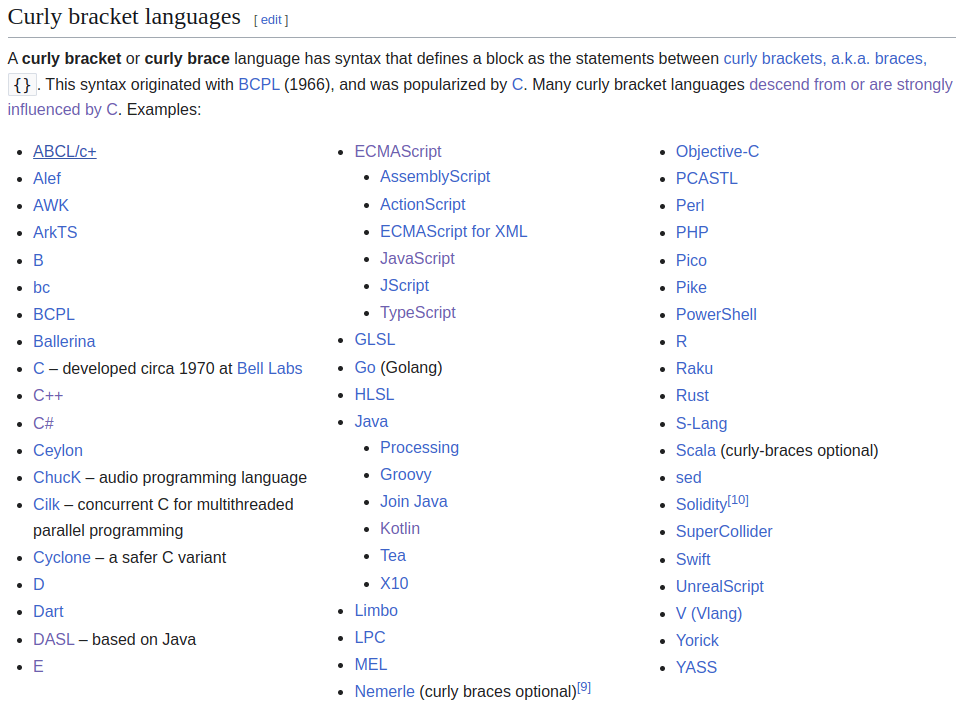

We can use Cheerio to traverse multiple pages by following anchor – <a> – elements. For example, let’s fetch the information about all curly bracket languages.

To do that, we first need to fetch the page that contains the URLs of the pages we want to scrape.

fetchMultipleProgrammingLanguagesData.ts

|

1 2 3 4 5 6 7 8 9 10 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; async function fetchMultipleProgrammingLanguagesData() { const listUrl = 'https://en.wikipedia.org/wiki/List_of_programming_languages_by_type'; const $ = await fetchWebsiteContent(listUrl); // ... } |

Now, we need to create an array of URLs where each one points to a page about a particular programming language.

fetchMultipleProgrammingLanguagesData.ts

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; import { load } from 'cheerio'; async function fetchMultipleProgrammingLanguagesData() { const listUrl = 'https://en.wikipedia.org/wiki/List_of_programming_languages_by_type'; const $ = await fetchWebsiteContent(listUrl); const curlyLanguagesItems = $('#Curly_bracket_languages') .closest('div.mw-heading') .nextAll('.div-col') .first() .find('li'); const urls = curlyLanguagesItems.toArray().map((element) => { const anchor = load(element)('a'); return anchor.attr('href'); }); // ... } |

Now, we should fetch the information about every programming language. To do that in parallel, we need to use the Promise.all function.

fetchMultipleProgrammingLanguagesData.ts

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 |

import { fetchWebsiteContent } from './fetchWebsiteContent'; import { load } from 'cheerio'; import { scrapeProgrammingLanguageInformation } from './scrapeProgrammingLanguageInformation'; async function fetchMultipleProgrammingLanguagesData() { const listUrl = 'https://en.wikipedia.org/wiki/List_of_programming_languages_by_type'; const $ = await fetchWebsiteContent(listUrl); const curlyLanguagesItems = $('#Curly_bracket_languages') .closest('div.mw-heading') .nextAll('.div-col') .first() .find('li'); const urls = curlyLanguagesItems.toArray().map((element) => { const anchor = load(element)('a'); return anchor.attr('href'); }); return Promise.all( urls.map((url) => { if (url) { return scrapeProgrammingLanguageInformation( `https://en.wikipedia.org${url}`, ); } }), ); } |

With this approach, we can scrape multiple pages at once.

|

1 2 3 |

const data = await fetchMultipleProgrammingLanguagesData(); console.log(data); |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

[ { title: '', designedBy: '', firstAppearance: '', stableRelease: '' }, { title: 'Alef', designedBy: 'Phil Winterbottom', firstAppearance: '1992; 33 years ago (1992)', stableRelease: '' }, { title: 'AWK', designedBy: 'Alfred Aho, Peter Weinberger, and Brian Kernighan', firstAppearance: '1977; 48 years ago (1977)', stableRelease: 'IEEE Std 1003.1-2008 (POSIX) / 1985\n ' }, { title: 'ArkTS', designedBy: '', firstAppearance: 'September 30, 2021; 3 years ago (2021-09-30)', stableRelease: '5.0.0.71\n / September 29, 2024; 4 months ago (2024-09-29)' }, { title: 'B', designedBy: 'Ken Thompson', firstAppearance: '1969; 56 years ago (1969)[1]', stableRelease: '' }, ... ] |

It’s crucial to keep in mind that not every Wikipedia page follows the same HTML structure. For example, the first item on the list doesn’t have the data we’re looking for, and we’re not handling it gracefully.

Summary

In this article, we’ve explored using the Cheerio library for web scraping. To do that, we first fetched the HTML contents of the page and then parsed them using Cheerio.

Cheerio is a great tool for web scraping in many cases, but it does have some limitations. The key drawback is that it relies on the full HTML content being available when we fetch the page. However, a lot of websites are interactive and depend on JavaScript to display the data on the screen. Since Cheerio doesn’t execute JavaScript, it can’t scrape content that is rendered on the client side. If we want to do that, we should use tools such as Playwright.

Cheerio is still a great choice for web scraping if we aim for simplicity and don’t need to deal with dynamic content.